Philip LaPorte

mathematics phd candidate, uc berkeley

(2026) PNAS Nexus. PL, L Pracher and S Pal

(2026) Proceedings of the National Academy of Sciences. PL, C Hilbe, NE Glynatsi and MA Nowak

(2023) Journal of the Royal Society Interface. PL and MA Nowak

(2023) PLOS Computational Biology. PL, C Hilbe and MA Nowak

talks and presentations

(2026) AMS Spring Eastern Sectional Meeting

(2025) UC Berkeley Probability Seminar

(2025) AMS New England Graduate Student Conference

(2024) Fu Lab, Dartmouth Mathematics Dept

(2023) Many Cheerful Facts seminar, UC Berkeley

misc

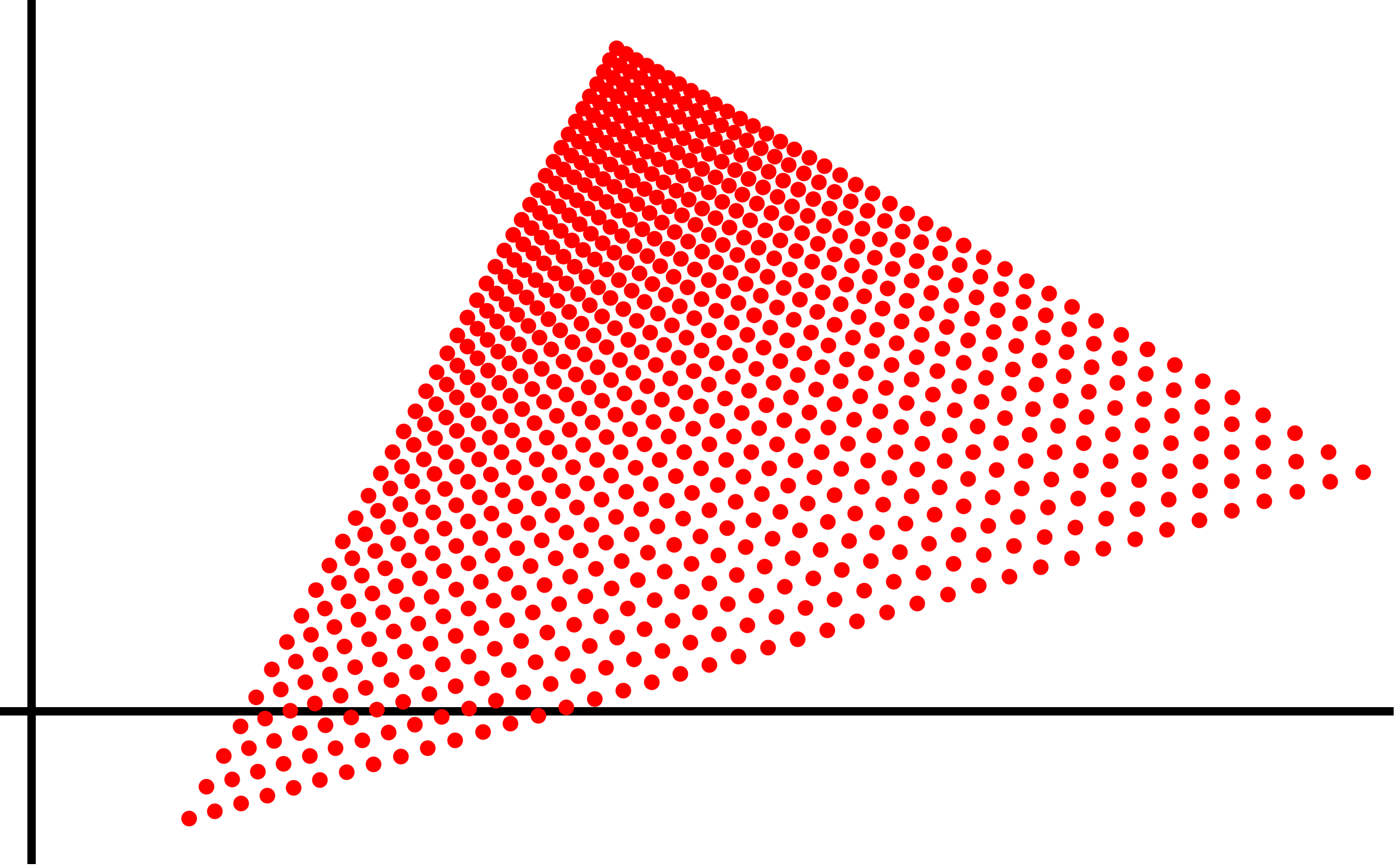

A map $\Delta^n\to \mathbb{R}^m$ which is not affine-linear but preserves straight line segments. These arise when writing the payoff vector of a repeated game as a function of one player’s randomized action at a given history. All other strategy components must be fixed. I first saw this interesting property for 2x2 games in this paper. It has a neat proof for discounted Markov decision processes, with vector-valued payoffs based on the current state.

[See also Joseph LaPorte.]